AWS recently (Feb. 7) shared a quick and simple solution to automatically STOP and START EC2 and RDS instances. Until now, achieving this required a custom or third-party solution, which was neither simple nor cost-effective. This solution is simple, low-cost, and can be deployed in 5 minutes!

P.S. - As per the latest AWS guidelines, if you're using the old EC2 Scheduler, you must migrate to the new AWS Instance Scheduler.

Use Cases

You want to schedule your Dev / Test server instances to run only during business hours on weekdays. On a per-instance basis, this could save up to 70% on your AWS bill (for the instances tagged for this solution).

Architecture of the Solution

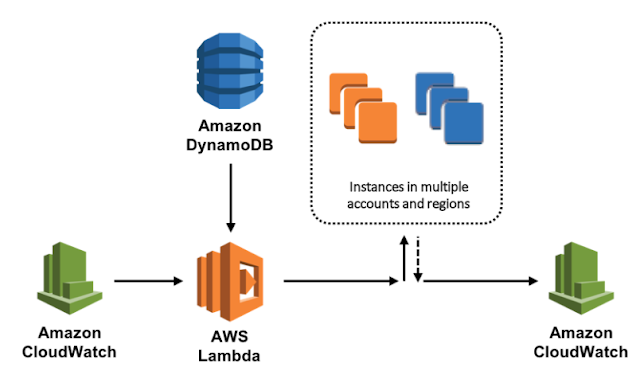

This solution is deployed using a CloudFormation template shared by AWS. The template deploys a stack with the following three components:

- AWS Lambda

- CloudWatch

- DynamoDB

AWS Instance Scheduler [Image Credit: AWS website]

Prerequisites

- An active AWS account.

- An EC2 (or RDS) instance. We'll use an EC2 instance for this demo.

- Beginner-level knowledge of CloudFormation & DynamoDB is helpful but not required, as the implementation is mostly abstracted away. Only a few clicks & a few configuration changes are needed, depending on your requirements.

Deploying the Solution

- Sign in to your AWS account.

- Click the Launch Solution button on this web page (see Step 1 of the shared link).

- This opens the CloudFormation Select Template page.

- On this page, select the Region of your choice from the drop-down list at the top right. The default is US East (N. Virginia).

- Leave the default options as they are & click Next.

- On the Specify Details page, enter a name for your stack in the Stack Name text box. On the same page, under the Parameters section, enter your Default Time Zone. You can also change this later in the DynamoDB table.

- Click Next.

- You're now on the Options page. Click Next.

- You're now on the Review page. Scroll down & tick the checkbox at the bottom of the page, which reads "I acknowledge that AWS CloudFormation might create IAM resources". Click Create, then wait a couple of minutes for the stack to be created.
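If you prefer scripting to clicking, the console steps above can be roughly sketched with boto3. This is only a sketch under assumptions: the template URL is a placeholder you must copy from the Launch Solution page yourself, the parameter key `DefaultTimezone` is my assumption about how the template names the Default Time Zone field, and the helper names are made up for illustration.

```python
from typing import Any, Dict


def build_create_stack_kwargs(
    stack_name: str,
    template_url: str,
    default_timezone: str = "UTC",
) -> Dict[str, Any]:
    """Build keyword arguments for the CloudFormation create_stack call."""
    return {
        "StackName": stack_name,
        # Placeholder: pass the template URL shown on the Launch Solution page.
        "TemplateURL": template_url,
        "Parameters": [
            # Assumed parameter key; check the Parameters section of the template.
            {"ParameterKey": "DefaultTimezone", "ParameterValue": default_timezone},
        ],
        # Scripted equivalent of ticking the "might create IAM resources" checkbox.
        "Capabilities": ["CAPABILITY_IAM"],
    }


def deploy_scheduler(
    stack_name: str,
    template_url: str,
    region: str = "us-east-1",
    default_timezone: str = "UTC",
) -> str:
    """Create the stack and wait for it to finish (hypothetical helper)."""
    import boto3  # third-party; imported here so the helper above works without it

    cfn = boto3.client("cloudformation", region_name=region)
    response = cfn.create_stack(
        **build_create_stack_kwargs(stack_name, template_url, default_timezone)
    )
    # Blocks until the stack reaches CREATE_COMPLETE (the "couple of minutes" wait).
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return response["StackId"]
```

Keeping the request in a separate function lets you inspect exactly what would be sent before anything is created in your account.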

Linking Your EC2 with the Instance Scheduler

This step is simple, but it can prove a bit tricky if you rely on the official documentation alone. I think the official documentation does not cover this step in enough detail or with screenshots, so the clarity is missing. So here we go.

- Go to Services and select EC2. Select the instance you want to test for automatic START / STOP. Create a new instance if you don't already have one.

- Select the instance. Go to Tags. Enter Key as Schedule and Value as uk-office-hours.
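The same tag can be applied from a script, which is handy when you have more than one instance. Here is a minimal sketch using boto3's EC2 `create_tags` call; the function names and the default region are my own illustrative choices, not part of the AWS solution.

```python
from typing import Any, Dict, List


def build_schedule_tag_kwargs(
    instance_ids: List[str],
    schedule_name: str = "uk-office-hours",
    tag_key: str = "Schedule",
) -> Dict[str, Any]:
    """Build keyword arguments for the EC2 create_tags call."""
    return {
        "Resources": list(instance_ids),
        # Same Key / Value pair as entered by hand in the Tags tab.
        "Tags": [{"Key": tag_key, "Value": schedule_name}],
    }


def tag_instances(instance_ids: List[str], region: str = "us-east-1") -> None:
    """Attach the schedule tag to the given instances (hypothetical helper)."""
    import boto3  # third-party; imported here so the helper above works without it

    ec2 = boto3.client("ec2", region_name=region)
    ec2.create_tags(**build_schedule_tag_kwargs(instance_ids))
```

For example, `tag_instances(["i-0123456789abcdef0"])` would tag a single instance, where the instance ID is a placeholder for your own.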

Now observe your EC2 instance based on the time settings & the Time Zone that you've selected. By default the Time Zone is UTC. If you've not made any changes in the DynamoDB tables, the tagged EC2 instance should be in the running state only between 9 AM and 5 PM, Monday to Friday. That is 8 hours out of 24 on each weekday, and only 40 of the 168 hours in a week, so your instance will run for less than a quarter of the week!
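To make the default schedule concrete, here is a small stand-alone sketch of the rule it describes, plus the arithmetic for how much of the week the instance runs. It is not the scheduler's actual code: I'm assuming the end time is exclusive and that the timestamp is already expressed in the schedule's time zone.

```python
from datetime import datetime, time

# Default period as described in the post: 9 AM to 5 PM, Monday to Friday.
BEGIN_TIME = time(9, 0)
END_TIME = time(17, 0)
WEEKDAYS = {0, 1, 2, 3, 4}  # datetime.weekday(): Monday is 0, Sunday is 6


def should_be_running(moment: datetime) -> bool:
    """Return True if the instance should be running at this moment.

    Assumes `moment` is in the schedule's time zone and that the end
    time is exclusive (an assumption, not confirmed behaviour).
    """
    return moment.weekday() in WEEKDAYS and BEGIN_TIME <= moment.time() < END_TIME


def weekly_running_fraction() -> float:
    """Share of the 168 hours in a week during which the instance runs."""
    hours_per_day = (END_TIME.hour - BEGIN_TIME.hour) + (
        END_TIME.minute - BEGIN_TIME.minute
    ) / 60
    return hours_per_day * len(WEEKDAYS) / (7 * 24)


if __name__ == "__main__":
    print(should_be_running(datetime(2024, 1, 1, 10, 0)))  # a Monday morning
    print(f"{weekly_running_fraction():.1%} of the week")
```

With the defaults this comes to 40 running hours out of 168, which is in line with the "up to 70%" savings figure quoted earlier.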

To Change the Schedule / Time / Weekdays

- Go to the DynamoDB service.

- Select the (radio button) table named *-ConfigTable-*.

- Select Items (right pane).

- Select the period named office-hours.

- Change begintime & endtime. Click Save.

You can play around by changing the various parameters in the ConfigTable. Feel free to share your observations in the comments.
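The same edit can be scripted with boto3's DynamoDB `update_item` call. Treat this as a sketch built on assumptions: I'm assuming the ConfigTable is keyed on `type` and `name`, that times are stored as `HH:MM` strings, and you must pass the full table name from your own stack (the one matching *-ConfigTable-*). The helper names are hypothetical.

```python
from typing import Any, Dict


def build_period_update_kwargs(
    table_name: str,
    begintime: str,
    endtime: str,
    period_name: str = "office-hours",
) -> Dict[str, Any]:
    """Build keyword arguments for the DynamoDB update_item call."""
    return {
        "TableName": table_name,
        # Assumed key schema: partition key "type", sort key "name".
        "Key": {"type": {"S": "period"}, "name": {"S": period_name}},
        "UpdateExpression": "SET begintime = :b, endtime = :e",
        # Assumed format: 24-hour "HH:MM" strings, e.g. "08:00".
        "ExpressionAttributeValues": {
            ":b": {"S": begintime},
            ":e": {"S": endtime},
        },
    }


def update_period(
    table_name: str,
    begintime: str,
    endtime: str,
    region: str = "us-east-1",
) -> None:
    """Change the start and end time of the office-hours period."""
    import boto3  # third-party; imported here so the helper above works without it

    dynamodb = boto3.client("dynamodb", region_name=region)
    dynamodb.update_item(**build_period_update_kwargs(table_name, begintime, endtime))
```

Before running anything like this, open the item in the console once to confirm the attribute names and time format match what your table actually stores.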

Happy Cloud Computing!